Datasets and Competitions

Our research team develops and shares large, publicly available datasets to advance learning analytics. We apply natural language processing (NLP) techniques to analyze both our own and external datasets, driving insights into educational practices. To encourage global participation, we host competitions that challenge talent worldwide to explore new approaches in learning analytics. Additionally, we leverage these datasets to fine-tune and adapt language models for educational interventions, enhancing their effectiveness and impact.

Datasets

The competition dataset comprises about 24000 student-written argumentative essays. Each essay was scored on a scale of 1 to 6. Your goal is to predict the score an essay received from its text.

The dataset consists of approximately 22,000 essays with surrogate identifiers replacing original PII, aimed at PII annotation, with 70% reserved for testing and external data encouraged for training.

dataset comprises about 5000 logs of user inputs, such as keystrokes and mouse clicks, taken during the composition of an essay.

The dataset comprises about 10,000 essays, some written by students and some generated by a variety of large language models (LLMs).

This dataset contains around 24,000 student summaries from grades 3-12, scored on content and wording, for a competition aiming to predict similar scores on unseen topics.

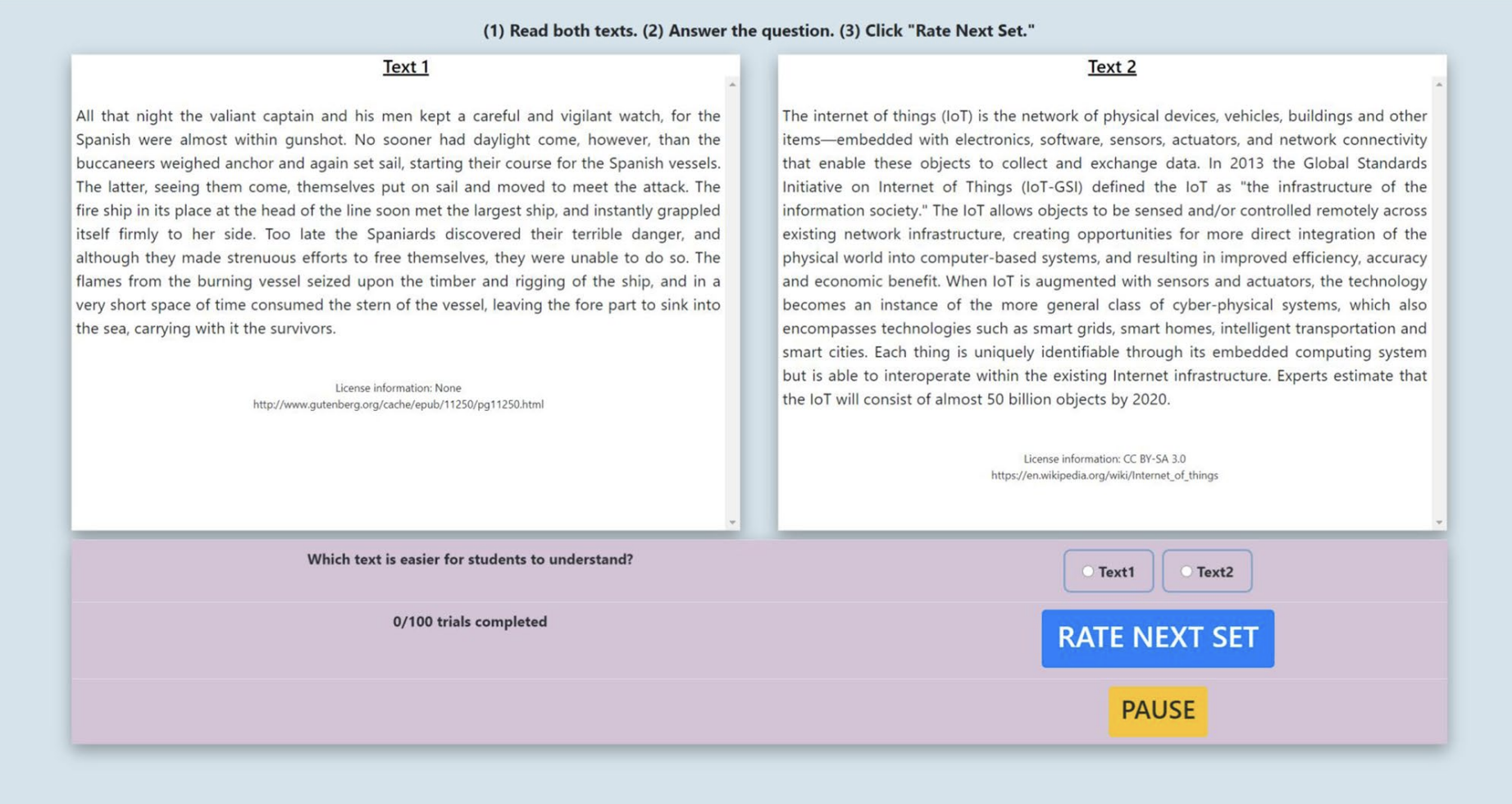

CLEAR provides unique readability scores for ~5,000 excerpts leveled for 3rd-12th grade readers along with information about the excerpts’ year of publishing, genre, and other meta-data.

PERSUADE is an open-source corpus comprising over 25,000 essays annotated for argumentative and discourse elements and relationships between these elements. The corpus includes holistic quality scores for each essay and for each argumentative and discourse element. Lastly, PERSUADE includes detailed demographic information for the writers. Kaggle competition 2021: Feedback Prize - Evaluating Student Writing

ELLIPSE comprises ~7,000 essays written by English Language Learners (ELL). The essays were written on 29 different independent prompts that required no background knowledge on the part of the writer. Individual difference information is made available for each essay, including economic status, gender, grade level (8-12), and race/ethnicity. Each essay was scored by two normed human raters for English language proficiency including an overall score of English proficiency and analytic scores for cohesion, syntax, vocabulary, phraseology, grammar, and conventions.

Competitions